Migration of a banking microservice solution from AWS to Oracle Cloud Infrastructure

Customer story

About the project

Our client, Finshape Hungary Ltd., provides a banking service called Money Stories, originally hosted on AWS. Due to a new partnership with a company already contracted with Oracle, and the absence of an available region in the relevant country/area, Finshape opted to migrate their stack to Oracle Cloud to meet their customer’s needs.

However, this required in-depth knowledge of both Cloud Native technologies and Oracle Cloud Infrastructure. This is where Datatronic Ltd. entered the equation.

What does the AWS architecture look like?

The architecture was already microservice-based, with Finshape possessing a comprehensive AWS solution comprising EKS, a CI/CD pipeline, OAuth 2.0, databases, load balancers, and S3 buckets, among other components.

Our objective was to execute this migration as smoothly and expeditiously as possible. We needed to identify alternatives for the AWS services within Oracle Cloud and collaborate with developers to replicate integration solutions.

Cloud agnostic design

Developing a cloud-agnostic solution can be arduous (and at times uneconomical). Managed cloud services are often preferable to self-hosted open-source alternatives, because the cloud provider takes care of their maintenance, support, and security. For this reason, Finshape's solution already relied heavily on AWS-managed services.

Architecture in Oracle Cloud Infrastructure

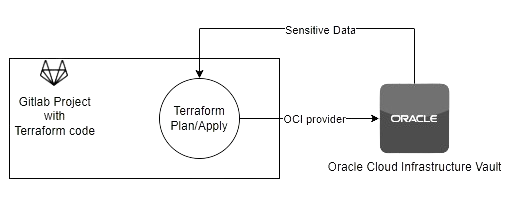

IaC with Terraform

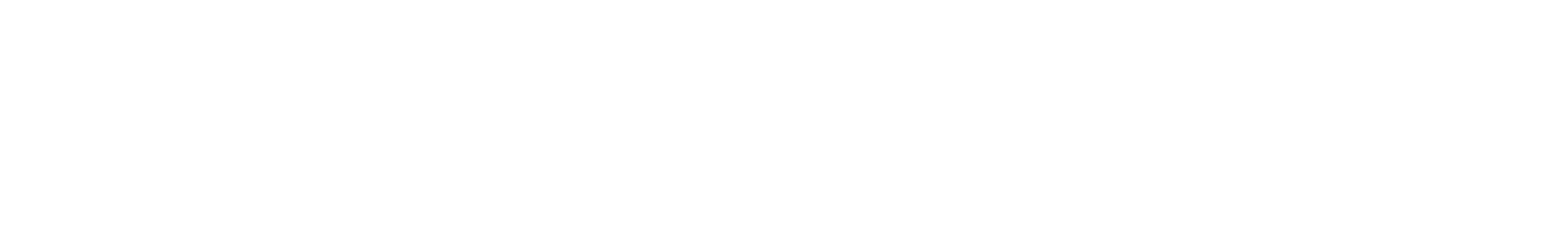

Our infrastructure within Oracle Cloud is managed with Terraform, following Infrastructure as Code (IaC) principles. The code is executed by CI/CD pipelines, and resources are created through the OCI Terraform provider. Whenever a change is merged into a specific branch, a CI/CD pipeline starts. The pipeline consists of three stages:

- Validate: During the validation phase, both “terraform init” and “terraform validate” are executed. The “init” step initializes the working directory, configuring the backend and downloading every required provider plug-in and module to the runner; the “validate” step then checks the syntax and internal consistency of the HCL code.

- Plan: At this point, the pipeline generates an execution plan that shows developers the upcoming changes to the infrastructure (resources to be created, updated, or destroyed). Terraform refreshes its view of the remote cloud resources and compares it with the desired configuration defined in the code; nothing is changed in the cloud at this stage.

- Apply: This stage requires a manual action, so developers can decide whether to apply the plan generated by the preceding “plan” job or to reject it and adjust the code, avoiding the creation, update, or deletion of unwanted resources. Only after approval are the changes carried out on the cloud provider’s side.
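
A minimal Python sketch of how the three stages could map onto Terraform CLI calls. It assumes the plan is saved to a file (here the hypothetical name `tfplan`) so that the manually approved apply job executes exactly the plan that was reviewed; the helper functions are illustrative and are not Finshape's actual pipeline definition.

```python
"""Hypothetical sketch: the validate, plan, and apply stages as Terraform CLI calls."""
import subprocess
from typing import List

PLAN_FILE = "tfplan"  # hypothetical name of the saved plan artifact


def validate_commands() -> List[List[str]]:
    # init configures the backend and downloads providers/modules;
    # validate checks syntax and internal consistency of the HCL code.
    return [
        ["terraform", "init", "-input=false"],
        ["terraform", "validate"],
    ]


def plan_commands() -> List[List[str]]:
    # Save the plan so the apply stage runs exactly what was reviewed.
    return [["terraform", "plan", "-input=false", f"-out={PLAN_FILE}"]]


def apply_commands(approved: bool) -> List[List[str]]:
    # Manual gate: nothing is applied unless a person approved the plan.
    if not approved:
        return []
    return [["terraform", "apply", "-input=false", PLAN_FILE]]


def run(commands: List[List[str]]) -> None:
    # Execute each command in order and stop at the first failure.
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

In a real CI/CD system each stage usually runs on a fresh runner, so the plan and apply jobs would also need `terraform init`, and the saved plan file would be passed between jobs as an artifact.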

Where is the architecture located, and how are its components separated?

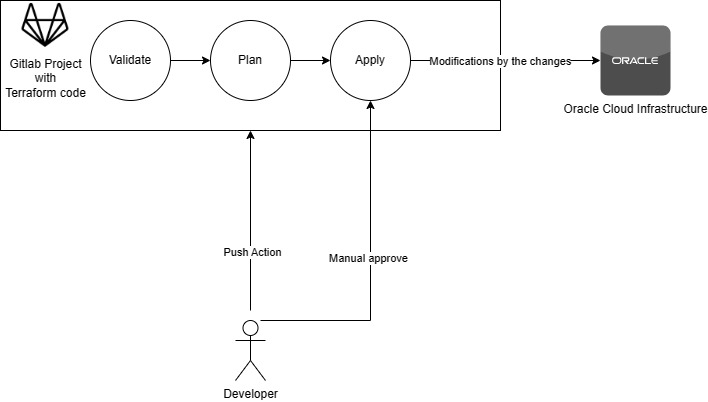

The infrastructure resides within Finshape’s customer tenancy, with resources securely compartmentalized within a Virtual Cloud Network (VCN). Each resource type is allocated its own subnet to segregate traffic.

A tenancy serves as a secure and isolated segment of the Oracle Cloud Infrastructure for creating, organizing, and managing various cloud resources, including compute instances, networks, storage, databases, identity services, analytics, and more.

Compartments aid in organizing and controlling resource access. They function as collections of related resources, such as cloud networks, compute instances, or block volumes, accessible only to groups granted permission by organization administrators.

Each subnet in a VCN consists of a contiguous range of IPv4 or IPv6 addresses that does not overlap with other subnets in the VCN. Instances in a subnet share a common network configuration, such as the route table, security lists, and DHCP options.
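
The non-overlap rule can be checked before any Terraform code runs. The sketch below uses Python's standard `ipaddress` module and handles IPv4 ranges only; the VCN and subnet CIDRs in the test are hypothetical and not the ones used in this project.

```python
import ipaddress
from itertools import combinations
from typing import Dict, List


def check_subnets(vcn_cidr: str, subnets: Dict[str, str]) -> List[str]:
    """Return a list of problems; an empty list means the layout is valid."""
    vcn = ipaddress.ip_network(vcn_cidr)
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in subnets.items()}
    problems: List[str] = []
    # Every subnet must lie inside the VCN's address space.
    for name, net in nets.items():
        if not net.subnet_of(vcn):
            problems.append(f"{name} {net} is outside VCN {vcn}")
    # No two subnets may share any address.
    for (a, net_a), (b, net_b) in combinations(nets.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{a} {net_a} overlaps {b} {net_b}")
    return problems
```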

A Virtual Cloud Network (VCN) closely resembles a traditional network, with firewall rules and specific types of communication gateways. It is confined to a single Oracle Cloud Infrastructure region and covers one or more CIDR blocks (IPv4, and IPv6 if enabled).

OKE cluster

The OKE cluster serves as the cornerstone compute resource of the architecture, hosting the microservice-based applications.

The cluster is instantiated using the official Oracle OKE Terraform module, which significantly expedites the process of bootstrapping the cluster along with its associated components, thus saving considerable time. It orchestrates the creation of the VCN, Subnets, Network Security Groups, Node Pools, IAM roles and policies, Bastion and Operator hosts, as well as the Kubernetes cluster itself.

Oracle Cloud Infrastructure Container Engine for Kubernetes (OKE) is a managed Kubernetes service designed to streamline the operation of enterprise-grade Kubernetes deployments at scale. It minimizes the time, expense, and resources required to manage the intricacies of Kubernetes infrastructure. With Container Engine for Kubernetes, users can effortlessly deploy Kubernetes clusters and ensure dependable operations for both the control plane and worker nodes through automated scaling, upgrades, and security patching.

Kubernetes networking

The worker nodes of the cluster reside in their own private subnet within the Virtual Cloud Network (VCN). This subnet hosts Finshape’s pods and deployments, along with Kyverno and the Nginx Ingress Controller. The OCI VCN-Native Pod Networking add-on serves as the Container Network Interface (CNI) plugin.

When utilizing the OCI VCN-Native Pod Networking CNI plugin, worker nodes are linked to two specified subnets for the node pool:

Worker Node Subnet:

This subnet facilitates communication between processes running on the cluster control plane (e.g., kube-apiserver, kube-controller-manager, kube-scheduler) and processes running on the worker node (e.g., kubelet, kube-proxy).

Pod Subnet:

This subnet supports communication between pods and allows direct access to individual pods via private pod IP addresses. It enables pods to communicate with other pods on the same worker node, pods on different worker nodes, OCI services (via a service gateway), and the internet (via a NAT gateway).

The Kubernetes API server managed by Oracle Cloud is situated within its own separate private subnet.

The API server is reachable from the operator host, which has no direct internet connectivity; the operator host itself can be accessed only through a bastion host. The Bastion service provides Secure Shell (SSH) access to private hosts in the cloud over secure channels.

Container images and Helm charts

Both container images and Helm charts are stored in the OCI Container Registry. Terraform installs the charts from this central registry through its Helm provider, while the Kubernetes cluster pulls the images securely via the OCI Service Gateway using an ImagePullSecret.
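
For reference, an ImagePullSecret is a Kubernetes Secret of type `kubernetes.io/dockerconfigjson`. The Python sketch below builds such a manifest. It assumes the common OCIR conventions (a `<region-key>.ocir.io` registry host, a `<tenancy-namespace>/<username>` login, and an OCI auth token as the password); the secret name and credentials in the test are hypothetical. `kubectl create secret docker-registry` produces an equivalent object.

```python
import base64
import json
from typing import Any, Dict


def docker_config_secret(name: str, registry: str, username: str, token: str) -> Dict[str, Any]:
    """Build a Kubernetes Secret manifest of type kubernetes.io/dockerconfigjson."""
    # The "auth" field is base64("username:password"), as in a Docker config file.
    auth = base64.b64encode(f"{username}:{token}".encode()).decode()
    config = {"auths": {registry: {"username": username, "password": token, "auth": auth}}}
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "kubernetes.io/dockerconfigjson",
        # Values under "data" must themselves be base64-encoded.
        "data": {".dockerconfigjson": base64.b64encode(json.dumps(config).encode()).decode()},
    }
```

Pods (or their service account) then reference the secret by name in `imagePullSecrets`.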

Helm simplifies the process of defining, installing, managing, and upgrading even the most intricate Kubernetes applications. Think of it as a package manager tailored for Kubernetes.

The Finshape application is consistently delivered to customers through a Helm chart, ensuring easy tracking and debugging of its versions. By making minor adjustments in the values.yaml file, the solution can be effortlessly configured to meet the customer’s requirements.
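
Helm combines the chart's default values.yaml with customer-specific overrides: nested maps are merged key by key, scalars and lists are replaced, and an explicit null removes a default. The Python function below approximates that behaviour to show why a small override file is enough; the value keys in the test are hypothetical, and Helm's own implementation handles further cases such as subchart and global values.

```python
import copy
from typing import Any, Dict


def merge_values(defaults: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Approximate Helm's coalescing of chart defaults with user overrides."""
    result = copy.deepcopy(defaults)  # never mutate the chart defaults
    for key, value in overrides.items():
        if value is None:
            # An explicit null removes the default key.
            result.pop(key, None)
        elif isinstance(value, dict) and isinstance(result.get(key), dict):
            # Nested maps are merged recursively.
            result[key] = merge_values(result[key], value)
        else:
            # Scalars and lists replace the default wholesale.
            result[key] = copy.deepcopy(value)
    return result
```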

A container image represents a streamlined, self-contained, executable bundle of software comprising all essential components to execute an application: code, runtime, system tools, libraries, and configurations. These images are used to instantiate containers within Kubernetes pods. The life cycle of these pods is regulated by Kubernetes workload management objects like deployments.

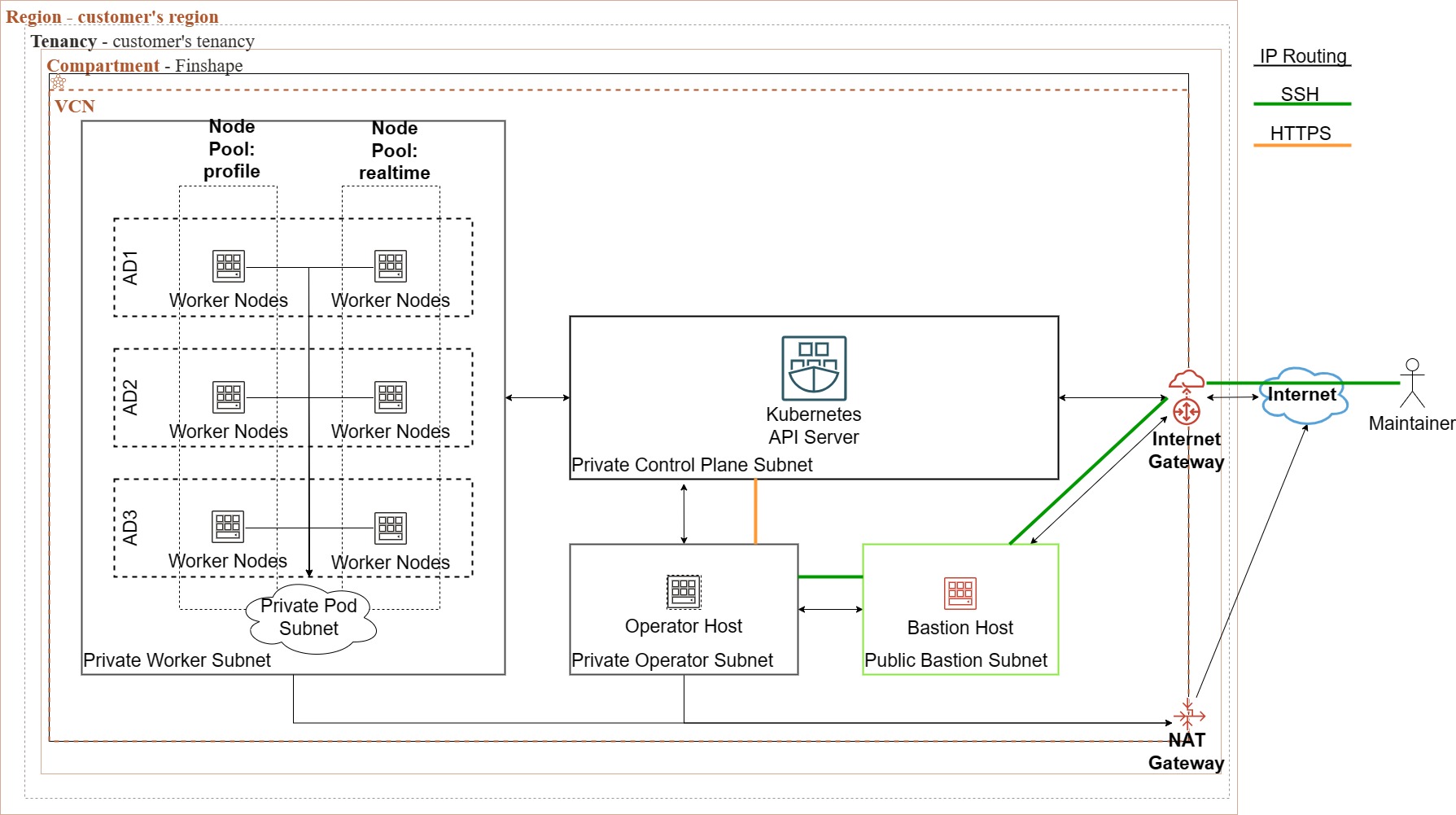

Logging Analytics

The Logging Analytics setup is implemented with a well-maintained module from Oracle. It deploys the log and metrics collection daemons on the cluster and its nodes, along with the dashboards required for cluster monitoring and the IAM policies that allow the daemons to send the logs to the designated log group.

Fluentd, an open-source data collector, gathers the logs and unifies their collection and consumption. The Management Agent, a service for low-latency interactive communication and data collection, gathers metrics from various targets on Oracle Cloud Infrastructure.

These components continuously collect and send data to Logging Analytics.

Oracle Cloud Infrastructure Logging Analytics is a cloud service driven by machine learning. It monitors, aggregates, indexes, and analyses log data from both on-premises and multi-cloud environments. This enables users to efficiently search, explore, and correlate data, speeding up troubleshooting and issue resolution. Additionally, it provides insights to support better operational decision-making.

Sensitive data

Passwords and secrets are securely stored in OCI Vault. Terraform reads these credentials directly from Vault whenever it creates or updates the resources that need them, so they never have to be hard-coded in the Terraform code.

Vault is an encryption service that lets you control the keys hosted in Oracle Cloud Infrastructure (OCI) hardware security modules (HSMs), while Oracle administers the HSMs.

OAuth2.0

User authentication is handled via OAuth 2.0. On AWS, the integration was done with Amazon Cognito; on Oracle Cloud, we used OCI IAM Identity Domains.

Customers’ users already have their own accounts in another application. The first time an authenticated user opens Finshape’s solution from that application, the system creates a corresponding user in our infrastructure and grants the necessary privileges. If the user already exists and is authenticated in the other application, they are authenticated here as well.
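
The first-login flow can be sketched as just-in-time provisioning. The Python below is a hypothetical illustration, not Finshape's code: it assumes the token's signature has already been verified by an OAuth 2.0 / OpenID Connect library, checks the standard `iss`, `aud`, and `exp` claims, and creates a user with default roles the first time a `sub` value is seen. The issuer URL, audience, and role names are placeholders.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class User:
    subject: str
    roles: List[str] = field(default_factory=list)


class UserDirectory:
    """Hypothetical in-memory stand-in for the application's user store."""

    def __init__(self, default_roles: List[str]):
        self.default_roles = default_roles
        self.users: Dict[str, User] = {}

    def login(self, claims: Dict[str, Any], issuer: str, audience: str,
              now: Optional[float] = None) -> User:
        # Claims are assumed to come from an already signature-verified token.
        now = time.time() if now is None else now
        if claims.get("iss") != issuer:
            raise PermissionError("unexpected issuer")
        aud = claims.get("aud")
        audiences = aud if isinstance(aud, list) else [aud]
        if audience not in audiences:
            raise PermissionError("token not intended for this application")
        if claims.get("exp", 0) <= now:
            raise PermissionError("token expired")
        user = self.users.get(claims["sub"])
        if user is None:
            # First visit: create the user and grant the default privileges.
            user = User(claims["sub"], list(self.default_roles))
            self.users[user.subject] = user
        return user
```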

OAuth 2.0 is the industry-standard protocol for authorization. The OAuth 2.0 authorization framework enables a third-party application to obtain limited access to an HTTP service, either on behalf of a resource owner by orchestrating an approval interaction between the resource owner and the HTTP service, or by allowing the third-party application to obtain access on its own behalf.

The Oracle Cloud Infrastructure console and OCI Identity and Access Management (OCI IAM) provide out-of-the-box OAuth services, which allow a client application to access protected resources that belong to an end user.

Cloud Agnostic possibility

An alternative solution for a cloud-agnostic design is Keycloak. Developed by Red Hat, Keycloak is an open-source identity and access management tool. Red Hat’s blog defines Keycloak as a single sign-on solution for both web apps and RESTful web services, whose primary objective is to make securing applications and services simpler for developers. Keycloak supports nearly all standard IAM protocols, including OAuth 2.0, OpenID Connect, and SAML, so it is a viable alternative to Oracle Cloud Infrastructure’s OAuth 2.0 solution. Keycloak can be conveniently installed on the same cluster as the application using a Helm chart.

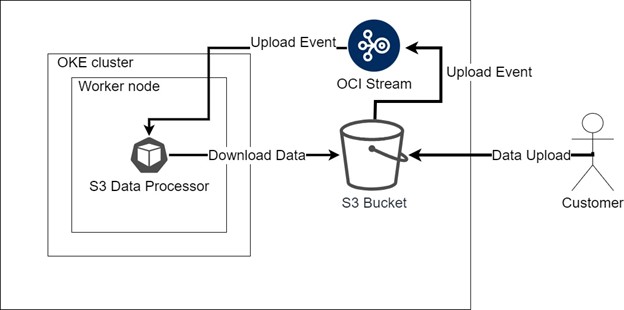

Data for processing

Data intended for processing by Finshape’s service is stored in S3-compatible OCI Object Storage buckets. Whenever a customer uploads data to a bucket, the application is promptly notified through event rules and streams.

With the Amazon S3 Compatibility API, developers can keep using their existing Amazon S3 tools, such as SDK clients, and integrate with Object Storage with minimal changes to their applications. The Amazon S3 Compatibility API and the native Object Storage API operate on the same data, so objects written through the Amazon S3 Compatibility API can be read through the native Object Storage API, and vice versa.
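
As an illustration, an existing boto3-based client could be pointed at Object Storage by changing only the endpoint and credentials. The sketch assumes the documented endpoint pattern `https://<namespace>.compat.objectstorage.<region>.oraclecloud.com` and a Customer Secret Key (access key and secret) created in OCI IAM; path-style addressing is set as a conservative choice. The namespace and region values are placeholders, and boto3 is imported only inside the client factory.

```python
from typing import Any


def s3_compat_endpoint(namespace: str, region: str) -> str:
    """Object Storage endpoint for the Amazon S3 Compatibility API."""
    return f"https://{namespace}.compat.objectstorage.{region}.oraclecloud.com"


def make_s3_client(namespace: str, region: str, access_key: str, secret_key: str) -> Any:
    # Imported lazily so the endpoint helper works without boto3 installed.
    import boto3
    from botocore.config import Config

    return boto3.client(
        "s3",
        region_name=region,
        endpoint_url=s3_compat_endpoint(namespace, region),
        aws_access_key_id=access_key,      # Customer Secret Key: access key
        aws_secret_access_key=secret_key,  # Customer Secret Key: secret
        # Path-style URLs (endpoint/bucket/key) as a conservative default.
        config=Config(s3={"addressing_style": "path"}),
    )
```

Existing calls such as `client.put_object(Bucket=..., Key=..., Body=...)` can then stay unchanged.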

The Oracle Cloud Infrastructure Streaming service offers a comprehensive solution for handling large-scale data streams in real-time. It is fully managed, scalable, and resilient, ensuring reliable ingestion and consumption of high-volume data streams. Streaming is suitable for various scenarios where data is generated and processed continuously and sequentially, following a publish-subscribe messaging approach. Both producers and consumers can leverage Streaming as an asynchronous message bus, allowing them to operate independently and at their own pace.

Oracle Cloud Infrastructure services generate structured messages called events, signalling alterations in resources. These events adhere to the CloudEvents industry standard format managed by the Cloud Native Computing Foundation (CNCF). This standard facilitates compatibility across different cloud providers and between on-premises systems and cloud services. Events may represent various actions such as create, read, update, or delete (CRUD) operations, changes in resource life cycle states, or system events affecting a resource. For instance, an event might be triggered when a file within an Object Storage bucket is added, modified, or removed.
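
The following sketch shows how a consumer might extract the bucket and object name from an Object Storage event. The field names (`eventType`, `data.resourceName`, `data.additionalDetails.bucketName`) and the event type strings follow the OCI Object Storage event format as we understand it and should be checked against the Events documentation; note that a bucket only emits object events when its "Emit Object Events" option is enabled. The sample bucket and object names in the test are hypothetical.

```python
import json
from typing import Optional, Tuple

# Object Storage event types treated as "new data" (assumed names; verify in the OCI docs).
UPLOAD_EVENTS = {
    "com.oraclecloud.objectstorage.createobject",
    "com.oraclecloud.objectstorage.updateobject",
}


def uploaded_object(message: str) -> Optional[Tuple[str, str]]:
    """Return (bucket, object name) for upload events, otherwise None."""
    event = json.loads(message)
    if event.get("eventType") not in UPLOAD_EVENTS:
        return None  # e.g. deletions are ignored by this consumer
    data = event.get("data", {})
    bucket = data.get("additionalDetails", {}).get("bucketName")
    name = data.get("resourceName")
    if not bucket or not name:
        return None
    return bucket, name
```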

Oracle Cloud Infrastructure Streaming relieves Apache Kafka users of the burden of setting up, maintaining, and managing the infrastructure that comes with hosting their own ZooKeeper and Kafka cluster.

Streaming is compatible with most Kafka APIs, so applications originally written for Kafka can send and receive messages through the Streaming service without rewriting existing code. This makes cloud-agnostic solutions considerably easier to build.
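
For example, a Kafka client library only needs different connection settings. The sketch below returns librdkafka-style settings (as accepted by the confluent-kafka Python client) for the Kafka-compatible endpoint. It assumes the `cell-1.streaming.<region>.oci.oraclecloud.com:9092` bootstrap server, SASL/PLAIN over TLS, a `<tenancy>/<user>/<stream pool OCID>` username, and an auth token as the password; all arguments are placeholders, and tenancies using identity domains may need the domain name in the username as well.

```python
from typing import Dict


def streaming_kafka_config(region: str, tenancy: str, user: str,
                           stream_pool_ocid: str, auth_token: str,
                           group_id: str) -> Dict[str, str]:
    """Connection settings for OCI Streaming's Kafka-compatible endpoint (assumed format)."""
    return {
        "bootstrap.servers": f"cell-1.streaming.{region}.oci.oraclecloud.com:9092",
        "security.protocol": "SASL_SSL",
        "sasl.mechanisms": "PLAIN",
        # Username format assumed: <tenancy>/<user>/<stream pool OCID>.
        "sasl.username": f"{tenancy}/{user}/{stream_pool_ocid}",
        # An OCI auth token serves as the password.
        "sasl.password": auth_token,
        "group.id": group_id,
    }
```

A consumer built from these settings would then subscribe to the stream name as if it were a Kafka topic.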

IAM and Instance Principals

Operators are granted access to OCI services via IAM policies. While operators have well-defined user identities, compute resources are authorized through instance principals to perform actions on OCI services.

Instance principals are a feature of Oracle Cloud Infrastructure Identity and Access Management (IAM) that lets instances make service calls. This eliminates the need to configure user credentials on compute instances. Instances themselves act as a principal type in IAM, each with its own identity, authenticated via certificates provisioned to the instance. These certificates are automatically created, assigned, and rotated. We use instance principals to authorize instances for API and CLI interactions with OCI Object Storage buckets and events.
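
In OCI, instance principals receive permissions through a dynamic group, whose matching rule selects the instances, plus policy statements for that group. The Python sketch below only formats such strings; the compartment OCID and the group, compartment, and bucket names are hypothetical, and the exact permissions Finshape's application needs are not stated in this article. The last function shows how the OCI Python SDK creates a client with an instance principals signer; it requires the `oci` package and only works on an OCI instance. When relying on instance principals, every pod on a matching node shares these permissions.

```python
from typing import List


def matching_rule(compartment_ocid: str) -> str:
    # Selects every instance (e.g. every OKE worker node) in the compartment.
    return f"ALL {{instance.compartment.id = '{compartment_ocid}'}}"


def policy_statements(dynamic_group: str, compartment: str, bucket: str) -> List[str]:
    # Hypothetical least-privilege grants: read one bucket, consume streams.
    return [
        f"Allow dynamic-group {dynamic_group} to read objects in compartment {compartment} "
        f"where target.bucket.name = '{bucket}'",
        f"Allow dynamic-group {dynamic_group} to use stream-pull in compartment {compartment}",
    ]


def object_storage_client():
    # No credentials in config: the signer fetches instance certificates itself.
    import oci

    signer = oci.auth.signers.InstancePrincipalsSecurityTokenSigner()
    return oci.object_storage.ObjectStorageClient(config={}, signer=signer)
```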

Database

Processed data is stored in a MySQL DB System provided by Oracle. Leveraging the OCI Terraform provider, we were able to configure the entire system using a single Terraform resource.

The MySQL Database Service is an Oracle Cloud Infrastructure native service that is fully managed, developed, and supported by the MySQL team at Oracle. Oracle automates essential tasks such as backup and recovery, as well as database and operating system patching.

The MySQL DB System with high availability (HA) comprises three MySQL instances spread across various availability or fault domains. Data replication is facilitated through MySQL Group Replication. The application interacts with a unified endpoint for both reading and writing data into the database. Should a failure occur, the DB System seamlessly switches over to a secondary instance, eliminating the need for reconfiguring the application.
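
Although the endpoint stays the same during a failover, open connections are dropped while the secondary instance takes over, so the application should reconnect. A minimal, driver-agnostic Python sketch of reconnecting with exponential backoff follows; `connect` is a hypothetical callable wrapping whichever MySQL driver is in use, and `ConnectionError` stands in for that driver's own connection exceptions.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_reconnect(connect: Callable[[], T], attempts: int = 5,
                   base_delay: float = 0.5,
                   sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `connect` against the same endpoint, backing off between tries."""
    last_error: Exception = RuntimeError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return connect()
        except ConnectionError as exc:
            last_error = exc
            if attempt < attempts - 1:
                # With the defaults: wait 0.5 s, 1 s, 2 s, ... between tries.
                sleep(base_delay * (2 ** attempt))
    raise last_error
```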

Load Balancing

The application’s API is exposed through the Nginx Ingress Controller in the OKE cluster, and incoming traffic is balanced across the worker nodes by an OCI Network Load Balancer.

The Load Balancer service automates the distribution of traffic from a single entry point to multiple servers reachable from your virtual cloud network (VCN). It also improves resource utilization, simplifies scaling, and helps maintain high availability.
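
To illustrate distributing traffic while avoiding failed backends, the toy Python model below uses round robin with health flags. This is a simplification: the OCI Network Load Balancer selects backends with hash-based policies (for example, a 5-tuple hash) rather than round robin, and its health checks run automatically. The backend names in the test are hypothetical.

```python
from itertools import cycle
from typing import Dict, List


class RoundRobinBalancer:
    """Toy model: spread requests over backends, skipping unhealthy ones."""

    def __init__(self, backends: List[str]):
        self.backends = backends
        self.healthy: Dict[str, bool] = {b: True for b in backends}
        self._ring = cycle(backends)

    def set_health(self, backend: str, ok: bool) -> None:
        # In a real load balancer, periodic health checks drive this flag.
        self.healthy[backend] = ok

    def pick(self) -> str:
        # Try each backend at most once per request.
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if self.healthy[candidate]:
                return candidate
        raise RuntimeError("no healthy backends")
```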

Summary

In summary, we have successfully executed the migration of Finshape’s Money Stories service from AWS to Oracle Cloud Infrastructure. With the migration complete, Finshape is well-positioned to continue offering their banking solution cost-effectively on Oracle Cloud.

All original AWS-based services have been replaced with Oracle Cloud alternatives while maintaining full functionality. Furthermore, the entire infrastructure is managed by Terraform, making it significantly easier to maintain, upgrade, and track changes to the infrastructure. In the event of configuration errors, rolling back to a previous working state is also simpler. New environments, such as test and development environments, can now be provisioned quickly and efficiently, mirroring the production environment.

The pipeline operates with three distinct phases, aiding developers in identifying and preventing errors before implementing any changes in the production environment. The manual approval phase provides an opportunity for thorough verification of all changes, ensuring greater control and oversight.

The OKE cluster, with nodes deployed across multiple availability domains, handles failover when an availability domain fails: through its dynamic orchestration, Kubernetes recreates failed pods and nodes in the remaining availability domains.

Container images and Helm charts provide an excellent opportunity to upgrade the application’s version seamlessly with rolling upgrades, ensuring zero downtime. In the event of application-level errors, the entire microservice-based solution can be rolled back to the previous working state.

Logging Analytics enables us to collect and aggregate logs and metrics from the architecture into a centralized solution, providing comprehensive insight into the system’s current state. This facilitates more effective debugging in the event of unexpected states, enhancing operational efficiency.

Sensitive data can be securely stored in a vault accessible only to Terraform during pipeline execution. All stored secrets are encrypted and can be easily rotated at high frequencies for enhanced security measures.

The Object Storage bucket securely encrypts and stores customer data and makes it available to the application from any availability domain, supporting high availability. The Streaming and Events features provide a low-latency way to receive notifications about file uploads, so processing can begin promptly.

Instance principals enable API calls and CLI commands through instance-based authentication, so we do not need to store or configure credentials on the application side. As long as a pod runs on one of the designated nodes, it retains access to the Object Storage bucket.

The Oracle-maintained database system offers significant advantages. There is no need to handle patching or the intricacies of running backups, and restoration is straightforward. The fault-tolerant design of the database system meets our requirements for high availability and resilience to availability domain failures.

The Load Balancer, a service provided by Oracle Cloud, ensures high availability of the application endpoints. If an availability domain or even a single node becomes unavailable, traffic is redirected to the remaining healthy nodes. Additionally, on the Kubernetes layer, the Nginx Ingress Controller ensures that traffic is directed only to healthy endpoints.
